I run this rubocop extension in VS Code:

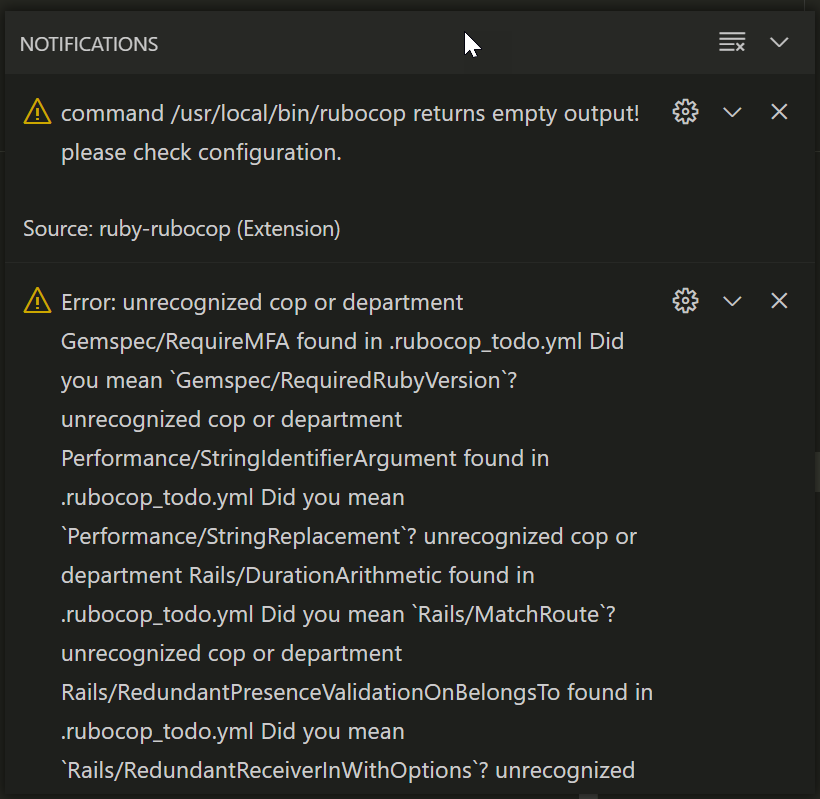

Recently, the project I was working on updated its Ruby version, and my rubocop stopped working with an error like this:

The issue was that the project's rubocop was newer than the globally installed rubocop, so it returned empty output. This extension doesn't seem to use rbenv properly, so I needed to update the global rubocop, which I did with:

```shell
/usr/local/bin/rubocop -v   # -> 1.22.3
sudo gem install rubocop
/usr/local/bin/rubocop -v   # -> 1.26
```
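A version mismatch like this is easy to check by hand, but a small script makes the check repeatable. Below is a minimal sketch, assuming the project uses Bundler (so `bundle exec rubocop` runs the project's pinned version) and that GNU `sort -V` is available for version-aware comparison. The function names `version_lt`, `first_version`, and `check_rubocop_versions` are hypothetical, and the default path `/usr/local/bin/rubocop` is simply the one from this post.

```shell
#!/usr/bin/env bash
# Sketch: detect when the globally installed rubocop (the one the editor
# extension appears to use) is older than the version the project pins.
# Assumptions: the project uses Bundler, and GNU `sort -V` is available.

# Succeeds (exit 0) if version $1 sorts strictly before version $2.
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$1" ]
}

# Reads `rubocop -v` style output on stdin and prints its first field.
first_version() {
  awk '{ print $1; exit }'
}

# Compares the global rubocop with the project's Bundler-managed one.
# $1: path to the global rubocop (defaults to the path used in this post).
# Returns 1 if the global copy is older, 2 if a version could not be read.
check_rubocop_versions() {
  local global_bin="${1:-/usr/local/bin/rubocop}"
  local global_v project_v
  global_v="$("$global_bin" -v 2>/dev/null | first_version)"
  project_v="$(bundle exec rubocop -v 2>/dev/null | first_version)"
  if [ -z "$global_v" ] || [ -z "$project_v" ]; then
    echo "could not read one of the rubocop versions" >&2
    return 2
  fi
  if version_lt "$global_v" "$project_v"; then
    echo "global rubocop $global_v is older than project rubocop $project_v"
    return 1
  fi
  echo "global rubocop $global_v is not older than project rubocop $project_v"
}
```

Source the file and run `check_rubocop_versions` from the project root; a return status of 1 flags the situation described above, where the global copy lags behind the project's.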

I still got some errors about missing rules, so I also needed to run:

```shell
sudo gem install rubocop-rake
sudo gem install rubocop-rails
sudo gem install rubocop-performance
```
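Those missing-rule errors typically mean the project's config asks for RuboCop extensions that the global install doesn't have. Assuming the project's `.rubocop.yml` loads them through a top-level `require:` key (the usual way extensions like rubocop-rails are enabled in RuboCop versions such as the 1.26 used here), a rough sketch like the one below can list which gems to install. It is a line-based awk parse, not a real YAML parser, it only handles the two simple forms shown in the comments, and the function names `required_gems` and `print_install_commands` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the extension gems a .rubocop.yml loads via a top-level
# `require:` key. Assumption: the config uses one of these simple forms:
#   require: rubocop-rails
# or
#   require:
#     - rubocop-rails
#     - rubocop-performance
# Inline arrays, anchors, and other YAML features are NOT handled.

# $1: path to the config file (defaults to ./.rubocop.yml).
required_gems() {
  awk '
    /^require:/ {
      in_req = 1
      sub(/^require:[ \t]*/, "")
      if ($0 != "") { print $0; in_req = 0 }  # single-value form
      next
    }
    in_req && /^[ \t]+-[ \t]*/ {
      sub(/^[ \t]+-[ \t]*/, "")               # strip the "  - " list marker
      print
      next
    }
    /^[^ \t]/ { in_req = 0 }                   # next top-level key ends the list
  ' "${1:-.rubocop.yml}"
}

# Prints (does not run) one install command per required gem, so the
# list can be reviewed before anything is installed globally.
print_install_commands() {
  required_gems "$1" | while read -r gem_name; do
    echo "sudo gem install $gem_name"
  done
}
```

Running `print_install_commands .rubocop.yml` in the project root prints a `sudo gem install` line for each required gem; printing instead of installing keeps the global environment untouched until you've checked the list.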

Ideally, I'd like this extension to use the rbenv version of Ruby, but this gets me sorted for now.